Application of Statistics in Physics

Claimed by Edwin Solis (April 16th, Spring 2022). Claimed by Julianne Latimer (April 13th, Spring 2025).

With the development of Quantum Mechanics and Statistical Mechanics, the subject of Statistics has become quintessential for understanding the foundation of these physical theories.

Mathematical Foundation

Probability

Probability is a numerical measure, written as a value between 0 and 1, of how likely an event is to occur. An event is an outcome (or set of outcomes) of performing an experiment, and the sample space is the set of all possible outcomes of that experiment. When all outcomes are equally likely, the probability of an event A is

[math]\displaystyle{ P(A)=\frac{\text{number of outcomes in } A}{\text{total number of outcomes}} }[/math]

For example, for a fair six-sided die, the sample space is [math]\displaystyle{ S=\{1, 2, 3, 4, 5, 6\} }[/math], and the probability of rolling a 3 is [math]\displaystyle{ P(X=3)=\frac{1}{6} }[/math]. Here the throw of the die is the experiment, and the event is the number that comes up.

The probability that the outcome lies somewhere in the sample space is [math]\displaystyle{ P(S) = 1 }[/math]; this is taken as one of the axioms of probability and is consistent with the definition above.

More generally, the probabilities of an experiment with discrete outcomes can be described by a function called the probability mass function (pmf) [math]\displaystyle{ p(x) }[/math]. In the dice example, the pmf is

[math]\displaystyle{ \begin{align} P(X=x) = p(x)=\begin{cases} \frac{1}{6} &\;|\;x=1,2,3,4,5,6\\ 0 &\;|\;\text{otherwise} \end{cases} \end{align} }[/math]

Now consider an experiment such as throwing a dart at a dartboard or measuring the length of a piece of rope. In both cases there are infinitely many points, arbitrarily close to each other, where the dart can land, and infinitely many possible values for the length of the rope. The sample space is therefore a continuous set of values. If each of these uncountably many outcomes had a nonzero probability, [math]\displaystyle{ P(S) }[/math] would diverge, contradicting [math]\displaystyle{ P(S) = 1 }[/math].

Therefore, for continuous cases we define a function called the probability density function (pdf) [math]\displaystyle{ f(x) }[/math]. Instead of assigning a probability to a specific value, the pdf is integrated over an interval to give the probability that the outcome falls within that interval.

For example, if a bus is equally likely to arrive at any time between [math]\displaystyle{ 10:00\text{ am} \text{ and } 11:00 \text{ am} }[/math], then the pdf for the bus arriving [math]\displaystyle{ x }[/math] minutes after [math]\displaystyle{ 10:00\text{ am} }[/math] is: [math]\displaystyle{ f(x) = \begin{cases} \frac{1}{60} &\;|\;0\leq x \leq 60\\ 0 &\;|\; \text{otherwise} \end{cases} }[/math] To obtain an actual probability, we integrate the pdf over the interval of interest. So the probability of the bus arriving during the first 10 minutes is:

[math]\displaystyle{ P(0\leq X \leq 10) = \int^{10}_0{f(x)\text{d}x} = \frac{1}{6} }[/math]
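As a numerical check of the bus example, the sketch below integrates the uniform pdf with a simple trapezoidal rule. The function names and the number of steps are illustrative choices, not part of the original example.

```python
def bus_pdf(x: float) -> float:
    """Uniform pdf for the arrival time x, in minutes after 10:00 am."""
    return 1 / 60 if 0 <= x <= 60 else 0.0


def integrate(f, a: float, b: float, n: int = 10_000) -> float:
    """Composite trapezoidal rule for the integral of f from a to b."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


p_first_10 = integrate(bus_pdf, 0, 10)  # P(0 <= X <= 10), approximately 1/6
p_total = integrate(bus_pdf, 0, 60)     # normalization over the support, approximately 1
print(p_first_10, p_total)
```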

Therefore, we have the following properties:

Discrete Sample Space

For the pmf [math]\displaystyle{ p(x) }[/math] with [math]\displaystyle{ n }[/math] possible events,

- [math]\displaystyle{ p(x)\geq 0 }[/math] if [math]\displaystyle{ x\in S }[/math], else [math]\displaystyle{ p(x) = 0 \text{ for } x\notin S }[/math]

- [math]\displaystyle{ P(X=x) = p(x) }[/math]

- [math]\displaystyle{ \sum_{x_i\in S}P(X=x_i) = 1 }[/math] with [math]\displaystyle{ S=\{x_1, x_2,...\} }[/math]

Continuous Sample Space

For the pdf [math]\displaystyle{ f(x) }[/math],

- [math]\displaystyle{ f(x)\geq0 }[/math]

- [math]\displaystyle{ P(a\lt X\lt b)=\int^b_a{f(x)\,\text{d}x} }[/math], from which it follows that the probability of any single value is [math]\displaystyle{ P(X=c)=\int^c_c{f(x)\,\text{d}x}=0 }[/math] if [math]\displaystyle{ f(c) }[/math] is finite.

- [math]\displaystyle{ P(-\infty\lt X\lt \infty)=\int^{\infty}_{-\infty}{f(x)\,\text{d}x}=1 }[/math]

Independence and Exclusiveness

Two events are independent if the occurrence of one does not change the probability of the other. Mathematically, this is written as [math]\displaystyle{ P(A\cap B)=P(A)P(B) }[/math].

Two events are mutually exclusive, on the other hand, if they cannot occur simultaneously: at most one of them can occur. This is written as [math]\displaystyle{ P(A\cap B)= 0 }[/math].

Finally, the inclusion-exclusion principle applies to probabilities as well by the relation: [math]\displaystyle{ P(A\cup B)=P(A) + P(B) - P(A\cap B) }[/math].
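These three relations can be checked by exact counting on a single fair die. The events chosen below (even, at most 2, odd) are illustrative assumptions, and `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction

S = {1, 2, 3, 4, 5, 6}  # sample space of one fair die


def P(event: set) -> Fraction:
    """Probability of an event when all outcomes are equally likely."""
    return Fraction(len(event & S), len(S))


A = {2, 4, 6}  # "even"
B = {1, 2}     # "at most 2"
C = {1, 3, 5}  # "odd"

# Independence: P(A and B) equals P(A) P(B)
print(P(A & B), P(A) * P(B))              # 1/6 1/6
# Mutual exclusiveness: A and C cannot both occur
print(P(A & C))                           # 0
# Inclusion-exclusion for the union
print(P(A | B), P(A) + P(B) - P(A & B))   # 2/3 2/3
```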

Random Variables and Distributions

From the base definition of probability, we can go a step further and deal with outcomes of experiments as their own variable. The outcome of a random experiment is called a Random Variable (r.v.). This variable does not have a definite value per se, rather, it possesses certain properties linked to the underlying sample space of the experiment. This means that all the possible events in the sample space are specific values a random variable can attain.

Using the dice example, throwing the die results in the outcome [math]\displaystyle{ X }[/math], the random variable for the number shown. [math]\displaystyle{ X }[/math] can take the values [math]\displaystyle{ 1,2,3,4,5,6 }[/math]. We can also define a random variable [math]\displaystyle{ Y }[/math] to be the sum of two dice throws, so [math]\displaystyle{ Y }[/math] can take the values [math]\displaystyle{ 2 \text{ to } 12 }[/math]. The properties of a random variable depend on the probability function of its sample space, so there are two types of random variable: discrete, described by a probability mass function, and continuous, described by a probability density function (or, equivalently, by its cumulative distribution function, cdf).
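A short sketch that tabulates the pmf of [math]\displaystyle{ Y }[/math], the sum of two dice, by enumerating all 36 equally likely ordered pairs. It assumes both dice are fair and the two throws are independent.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Count how many of the 36 equally likely (first, second) pairs give each sum.
counts = Counter(a + b for a, b in product(range(1, 7), repeat=2))
pmf_Y = {y: Fraction(c, 36) for y, c in sorted(counts.items())}

for y, p in pmf_Y.items():
    print(y, p)
```

The smallest possible sum is 2 and the largest is 12, with 7 the most likely value.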

The set of mathematical descriptions for the sample space and probability space of a random variable is called a distribution. From the respective type of random variables, we have discrete and continuous distributions.

Discrete

Using the probability mass function we have direct probabilities for specific values that the discrete random variable can take. This is useful for countable sets of events or measurements that can occur. Discrete distributions can be represented using a line graph by mapping every value [math]\displaystyle{ x\in S }[/math] to its corresponding probability [math]\displaystyle{ P(X=x)=p(x) }[/math].

Common discrete distributions are the Discrete Uniform Distribution, Bernoulli Distribution, Binomial Distribution, Poisson Distribution, and Hypergeometric Distribution.

Continuous

For the continuous case, we find a probability by integrating the probability density function over the interval of interest. These distributions are mainly used for quantities that can take any value in a continuum; they are also useful for approximating discrete distributions with a very large number of possible values. A continuous distribution can be represented by graphing the pdf, where the area under the curve over an interval is the probability, or by graphing the cdf, which makes it easy to read off the probability accumulated between the two endpoints of an interval.

The most commonly used continuous distribution is the Normal Distribution; other common ones include its relatives, the [math]\displaystyle{ \chi^2 }[/math] Distribution and the t-Distribution, as well as the Continuous Uniform Distribution, the Exponential Distribution, and the Boltzmann Distribution.

Multivariable

In addition to distributions of a single random variable, there are distributions of several random variables at once. These multivariate distributions are called joint probability distributions.

For these distributions, we need a probability for each combination of values of the random variables. In the discrete case, this function is called the joint probability mass function. For example, when drawing 4 cards from a deck, [math]\displaystyle{ X }[/math] could be the number of red cards drawn and [math]\displaystyle{ Y }[/math] the number of cards greater than 7. For these two random variables, the probability is written [math]\displaystyle{ P(X=x,Y=y) = p(x,y) }[/math], where [math]\displaystyle{ p(x,y) }[/math] is the joint pmf. Similarly, in the continuous case we define the joint probability density function. To obtain a probability from a joint pdf, we integrate over the desired rectangular region:

[math]\displaystyle{ P(a\leq X \leq b, c \leq Y \leq d) = \int^{d}_{c}\int^{b}_{a}f(x,y)\;\text{d}x\,\text{d}y }[/math]

or, for a more general region [math]\displaystyle{ R }[/math] of the plane:

[math]\displaystyle{ P((X, Y) \in R) = \iint_R f(x,y)\;\text{d}x\,\text{d}y }[/math]

For the special case of two random variables [math]\displaystyle{ X \text{ and } Y }[/math], the normalization conditions in the discrete and continuous cases are:

[math]\displaystyle{ \sum_{x\in S_X}\sum_{y \in S_Y} p(x,y) = 1 }[/math]

[math]\displaystyle{ \int^{\infty}_{-\infty}\int^{\infty}_{-\infty}f(x,y)\;\text{d}x\,\text{d}y=1 }[/math]
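The card example can be turned into a concrete joint pmf by counting. This sketch assumes Ace = 1 and J, Q, K = 11, 12, 13, so that "greater than 7" means ranks 8 through K (24 cards, 12 of them red), and that the 4 cards are drawn without replacement from a standard 52-card deck. It then checks the discrete normalization condition.

```python
from fractions import Fraction
from math import comb

# Assumption: Ace = 1 and J/Q/K = 11/12/13, so "greater than 7" means ranks 8..K.
RED_HIGH, RED_LOW, BLACK_HIGH, BLACK_LOW = 12, 14, 12, 14
TOTAL = comb(52, 4)  # number of equally likely 4-card hands

joint = {}  # joint pmf p(x, y): x = red cards drawn, y = cards greater than 7 drawn
for a in range(5):                  # red and > 7
    for b in range(5 - a):          # red and <= 7
        for c in range(5 - a - b):  # black and > 7
            d = 4 - a - b - c       # black and <= 7
            ways = (comb(RED_HIGH, a) * comb(RED_LOW, b)
                    * comb(BLACK_HIGH, c) * comb(BLACK_LOW, d))
            key = (a + b, a + c)
            joint[key] = joint.get(key, 0) + Fraction(ways, TOTAL)

print(sum(joint.values()))  # normalization: 1
print(joint[(2, 2)])        # P(X = 2, Y = 2)
```

Summing [math]\displaystyle{ p(x,y) }[/math] over [math]\displaystyle{ y }[/math] recovers the marginal pmf of [math]\displaystyle{ X }[/math], which here is hypergeometric (26 red cards out of 52).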

In general, for [math]\displaystyle{ n }[/math] variables we must have that

[math]\displaystyle{ \sum_{x_1 \in S_{X_1}}\dots\sum_{x_n\in S_{X_n}}p(x_1,\dots,x_n) = 1 }[/math], and

[math]\displaystyle{ \int^\infty_{-\infty}\dots\int^\infty_{-\infty}{f(x_1,\dots,x_n)\;\text{d}x_1\dots\text{d}x_n} = 1 }[/math]

Expectation

The expected value of a random variable (also known as its mean, average, or expectation) is the average of the values the random variable can take, each weighted by its probability. The familiar average [math]\displaystyle{ \bar{x}=\frac{\sum^n_{i=1}x_i}{n} }[/math] is only the special case in which all [math]\displaystyle{ n }[/math] values are equally likely. In general, we define the expectation [math]\displaystyle{ E[X] }[/math] of a random variable [math]\displaystyle{ X }[/math] as

[math]\displaystyle{ E[X] = \sum_{x\in S}xp(x) }[/math] for the discrete case, and

[math]\displaystyle{ E[X] = \int^\infty_{-\infty}{xf(x)\;\text{d}x} }[/math] for the continuous case.
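A minimal sketch of both definitions, reusing the fair die and the uniform bus pdf from earlier in this section. The trapezoidal integrator is an illustrative choice, and only the interval [0, 60] is integrated because [math]\displaystyle{ f(x) = 0 }[/math] elsewhere.

```python
from fractions import Fraction

# Discrete: fair die, p(x) = 1/6 for x = 1..6
pmf = {x: Fraction(1, 6) for x in range(1, 7)}
E_die = sum(x * p for x, p in pmf.items())
print(E_die)  # 7/2


def integrate(f, a: float, b: float, n: int = 10_000) -> float:
    """Composite trapezoidal rule for the integral of f from a to b."""
    h = (b - a) / n
    return h * (0.5 * (f(a) + f(b)) + sum(f(a + i * h) for i in range(1, n)))


# Continuous: bus arrival time, f(x) = 1/60 on [0, 60]
E_bus = integrate(lambda x: x * (1 / 60), 0, 60)
print(E_bus)  # approximately 30 minutes
```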

In physics, especially in Quantum Mechanics, the expectation of a quantity [math]\displaystyle{ X }[/math] is more commonly written as [math]\displaystyle{ \langle X \rangle }[/math].

This concept generalizes to the expectation of a function [math]\displaystyle{ g(X) }[/math] of [math]\displaystyle{ X }[/math]:

[math]\displaystyle{ E[g(X)] = \sum_{x\in S}g(x)p(x) }[/math], and

[math]\displaystyle{ E[g(X)] = \int^\infty_{-\infty}{g(x)f(x)\;\text{d}x} }[/math]
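For example, taking [math]\displaystyle{ g(x) = x^2 }[/math] for a fair die shows that [math]\displaystyle{ E[g(X)] }[/math] is generally not equal to [math]\displaystyle{ g(E[X]) }[/math]. The helper name `expectation` is hypothetical.

```python
from fractions import Fraction

pmf = {x: Fraction(1, 6) for x in range(1, 7)}  # fair die


def expectation(g, pmf):
    """E[g(X)] = sum over x of g(x) p(x)."""
    return sum(g(x) * p for x, p in pmf.items())


E_X = expectation(lambda x: x, pmf)
E_X2 = expectation(lambda x: x**2, pmf)
print(E_X2, E_X**2)  # 91/6 49/4
```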

Similarly, for the multivariate case, the dependence on the many variables leads to the expressions:

[math]\displaystyle{ E[g(X_1, \dots, X_n)] = \sum_{x_1 \in S_{X_1}}\dots\sum_{x_n\in S_{X_n}}g(x_1,\dots,x_n)p(x_1,\dots,x_n) }[/math], and

[math]\displaystyle{ E[g(X_1, \dots, X_n)] = \int^\infty_{-\infty}\dots\int^\infty_{-\infty}{g(x_1,\dots,x_n)f(x_1,\dots,x_n)\;\text{d}x_1\dots\text{d}x_n} }[/math]
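As an illustration of the multivariate formula, the sketch below takes two independent fair dice (an assumption that gives the joint pmf [math]\displaystyle{ p(x_1,x_2) = 1/36 }[/math]) and computes [math]\displaystyle{ E[X_1 + X_2] }[/math] and [math]\displaystyle{ E[X_1 X_2] }[/math].

```python
from fractions import Fraction
from itertools import product

# Joint pmf of two independent fair dice: p(x1, x2) = 1/36
joint = {(x1, x2): Fraction(1, 36) for x1, x2 in product(range(1, 7), repeat=2)}


def expectation(g, joint):
    """E[g(X1, X2)] = sum over (x1, x2) of g(x1, x2) p(x1, x2)."""
    return sum(g(*xs) * p for xs, p in joint.items())


print(expectation(lambda a, b: a + b, joint))  # 7
print(expectation(lambda a, b: a * b, joint))  # 49/4
```

Because the dice are independent, [math]\displaystyle{ E[X_1 X_2] }[/math] equals [math]\displaystyle{ E[X_1]E[X_2] = (7/2)^2 }[/math].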

Variance and Standard Deviation

Variance is defined as the expectation of the squared distance of a random variable from its mean. Mathematically, [math]\displaystyle{ Var(X)=E[(X-E[X])^2]=E[X^2]-(E[X])^2 }[/math]. The standard deviation, usually written [math]\displaystyle{ \sigma }[/math], is the square root of the variance, so that [math]\displaystyle{ Var(X) = \sigma^2 }[/math]. The standard deviation can be thought of loosely as a typical distance of the values from the mean; however, it is not by itself a measure of error. Rather, it quantifies the spread (dispersion) of a distribution around its mean. When the properties of a population are estimated from a sample, the standard deviation can be combined with the number of samples [math]\displaystyle{ n }[/math] to define a measure of error for the estimated mean, called the standard error: the standard deviation divided by [math]\displaystyle{ \sqrt{n} }[/math].
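Both expressions for the variance can be checked exactly for a fair die; this sketch assumes the uniform pmf [math]\displaystyle{ p(x) = 1/6 }[/math] used throughout.

```python
import math
from fractions import Fraction

pmf = {x: Fraction(1, 6) for x in range(1, 7)}  # fair die
mean = sum(x * p for x, p in pmf.items())

var_def = sum((x - mean) ** 2 * p for x, p in pmf.items())    # E[(X - E[X])^2]
var_short = sum(x**2 * p for x, p in pmf.items()) - mean**2    # E[X^2] - (E[X])^2
sigma = math.sqrt(float(var_def))                               # standard deviation

print(var_def, var_short)  # 35/12 35/12
print(round(sigma, 4))     # 1.7078
```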

The expression [math]\displaystyle{ \Delta X }[/math] is used in Quantum Mechanics to denote the standard deviation of the random variable [math]\displaystyle{ X }[/math].

Statistical population and samples

In the real world, the distribution of a random variable is usually not fully known: we may not know the type of distribution it follows, i.e. the shape of its pmf or pdf, or the exact values of its properties, e.g. average, standard deviation, etc.

Statistics defines the population as the complete set of existing data that describes its exact type of distribution and properties; and defines the sample as a specific subset chosen from the population which is used to gather some of the data.

An example is a deck of cards: the whole deck is the population, while a few cards drawn from it form a sample. In real-world problems, however, the population may consist of millions or billions of members, or be effectively infinite.

It is easy to go from the population to predictions about samples, but not the reverse. If the pdf or pmf of a random variable is known, its expectation and standard deviation follow directly, whereas estimating them from a finite number of trials is much harder.

To distinguish when we are talking about the properties of a sample, we define the sample average [math]\displaystyle{ \bar{x} }[/math] and standard deviation of the sample [math]\displaystyle{ s }[/math], while for the population, population average [math]\displaystyle{ \mu }[/math] and standard deviation of the population [math]\displaystyle{ \sigma }[/math].
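A sketch of the sample quantities, using 200 simulated throws of a fair die as a stand-in for real data (the simulation, seed, and sample size are illustrative assumptions). Python's `statistics.stdev` divides by [math]\displaystyle{ n-1 }[/math], the usual convention for the sample standard deviation [math]\displaystyle{ s }[/math], and the standard error of the mean is [math]\displaystyle{ s/\sqrt{n} }[/math].

```python
import math
import random
import statistics

random.seed(0)
# Hypothetical experiment: 200 throws of a fair die (population mu = 3.5, sigma ~ 1.708)
sample = [random.randint(1, 6) for _ in range(200)]

x_bar = statistics.fmean(sample)         # sample average
s = statistics.stdev(sample)             # sample standard deviation (n - 1 in the denominator)
std_error = s / math.sqrt(len(sample))   # standard error of the mean

print(f"x_bar = {x_bar:.3f}, s = {s:.3f}, SE = {std_error:.3f}")
```

The sample average [math]\displaystyle{ \bar{x} }[/math] should land within a few standard errors of the population average [math]\displaystyle{ \mu = 3.5 }[/math].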

Uses

Statistical Mechanics

Two State Paramagnet

A paramagnet is a material that acquires a net magnetic dipole moment when exposed to an external magnetic field, because its elementary magnetic dipoles tend to align with the field. In a two-state paramagnet, each dipole can point in only one of two directions: up ([math]\displaystyle{ \uparrow }[/math]) or down ([math]\displaystyle{ \downarrow }[/math]). For a two-state paramagnet of [math]\displaystyle{ N }[/math] particles, the total number of particles is the number in the "up" state plus the number in the "down" state:

[math]\displaystyle{ N = N_{\uparrow} + N_{\downarrow} }[/math]

An important quantity throughout statistical physics is the multiplicity ([math]\displaystyle{ W }[/math]) of the system being studied, i.e. the number of microstates consistent with a given macrostate. The multiplicity of the two-state paramagnet is analogous to that of a system of coins, since a coin has only the states "heads" and "tails", while each dipole has only the states "up" and "down". Recall the formula

[math]\displaystyle{ W(N,n)=\frac{N!}{n!(N-n)!} }[/math]

which is the multiplicity of a system of [math]\displaystyle{ N }[/math] coins with [math]\displaystyle{ n }[/math] heads. For the two-state paramagnet, [math]\displaystyle{ N }[/math] is the number of particles and [math]\displaystyle{ n }[/math] is replaced by [math]\displaystyle{ N_{\uparrow} }[/math] (replacing it with [math]\displaystyle{ N_{\downarrow} }[/math] gives the same result), resulting in

[math]\displaystyle{ W(N,N_{\uparrow})=\frac{N!}{N_{\uparrow}!(N-N_{\uparrow})!} }[/math]

for the multiplicity of a two-state paramagnet. Since every particle is either up or down, [math]\displaystyle{ N-N_{\uparrow} }[/math] is simply [math]\displaystyle{ N_{\downarrow} }[/math]. Hence the multiplicity can be written as

[math]\displaystyle{ W = \frac{N!}{N_{\uparrow}!N_{\downarrow}!} }[/math]

Boltzmann's equation for the entropy is

[math]\displaystyle{ S = k\ln W }[/math]

where [math]\displaystyle{ k }[/math] is Boltzmann's constant. Substituting the multiplicity above, we find

[math]\displaystyle{ S = k\ln\left(\frac{N!}{N_{\uparrow}!N_{\downarrow}!}\right) }[/math]

Now consider an ideal paramagnet in which each dipole is either parallel or antiparallel to the external magnetic field. The energy of an individual magnetic dipole is [math]\displaystyle{ U=-\vec{\mu}\cdot\vec{B} }[/math], where [math]\displaystyle{ \mu }[/math] is the magnitude of each dipole's magnetic moment, assumed constant. Assume the external magnetic field points upward; then upward (parallel) dipoles have negative energy and downward (antiparallel) dipoles have positive energy. Writing [math]\displaystyle{ U=-\mu B\cos\theta }[/math], an upward dipole has [math]\displaystyle{ \theta=0 }[/math], so its energy is [math]\displaystyle{ -\mu B }[/math]. Since there are [math]\displaystyle{ N_{\uparrow} }[/math] upward dipoles, their total energy is [math]\displaystyle{ U_{\uparrow}=-\mu BN_{\uparrow} }[/math]. Similarly, a downward dipole is antiparallel to the field, so [math]\displaystyle{ \theta=\pi }[/math] and its energy is [math]\displaystyle{ +\mu B }[/math], giving [math]\displaystyle{ U_{\downarrow}=\mu BN_{\downarrow} }[/math]. The sum of these quantities gives the total energy of the system, [math]\displaystyle{ U=\mu B(N_{\downarrow}-N_{\uparrow}) }[/math].

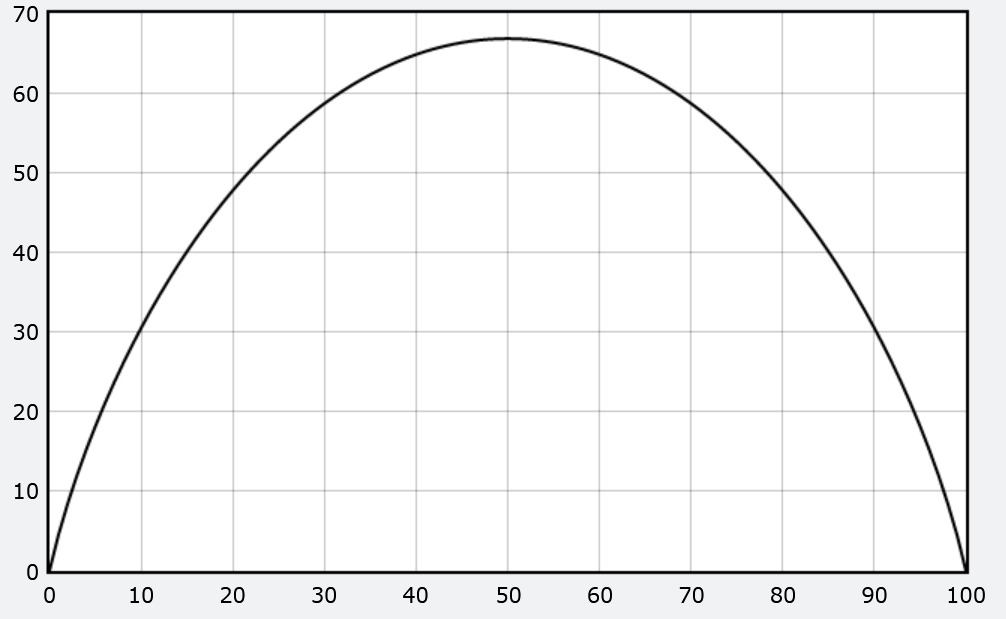

Graph of the entropy of a two-state paramagnet of 100 particles versus [math]\displaystyle{ N_{\uparrow} }[/math], where the [math]\displaystyle{ y }[/math]-axis shows the entropy divided by [math]\displaystyle{ k }[/math] for scale. As [math]\displaystyle{ N_{\uparrow} }[/math] increases from 0, the number of available microstates, and hence the entropy, increases. Past [math]\displaystyle{ N_{\uparrow} = 50 }[/math], the number of available microstates decreases, so the entropy decreases as more dipoles point up; the curve is nearly parabolic around its peak. If you like, you can think of the system as becoming less "orderly" as it approaches [math]\displaystyle{ 50 }[/math] on the [math]\displaystyle{ x }[/math]-axis and more "orderly" as it approaches [math]\displaystyle{ 0 }[/math] or [math]\displaystyle{ 100 }[/math]. https://www.glowscript.org/#/user/Luigi/folder/Private/program/EntropyParamag
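The entropy curve in this figure can be reproduced with the exact multiplicity, without any approximation. This sketch computes [math]\displaystyle{ S/k = \ln W }[/math] for [math]\displaystyle{ N = 100 }[/math] and locates the maximum; it is an independent illustration, not the linked GlowScript program.

```python
import math

N = 100  # number of dipoles, as in the figure


def entropy_over_k(n_up: int, N: int = N) -> float:
    """S/k = ln W with W = N! / (N_up! N_down!)."""
    return math.log(math.comb(N, n_up))


curve = [entropy_over_k(n) for n in range(N + 1)]
n_max = max(range(N + 1), key=lambda n: curve[n])
print(n_max, round(curve[n_max], 2))  # n_max is 50
```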

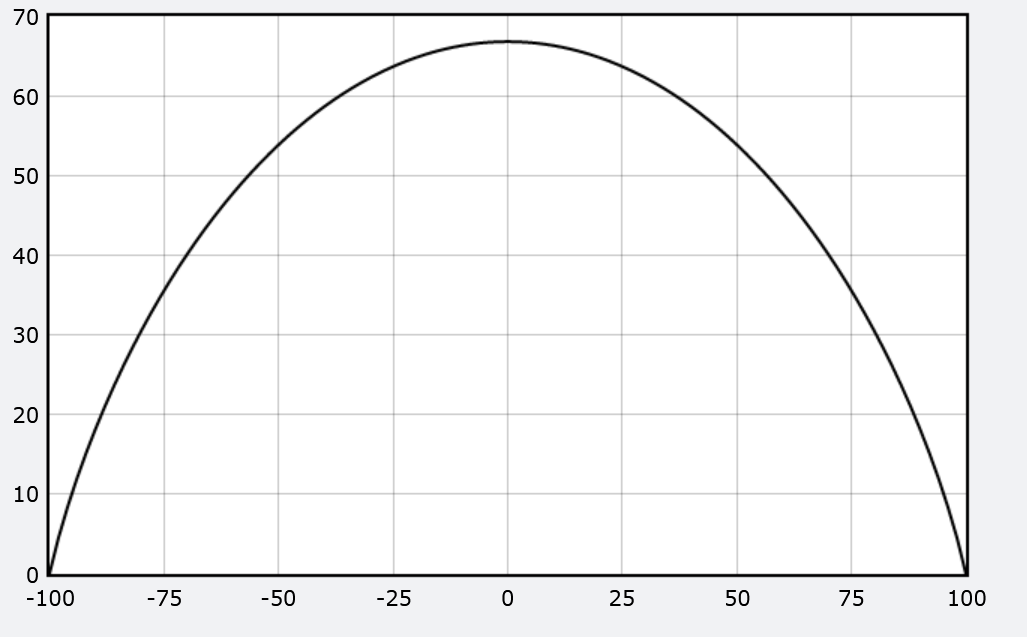

When discussing paramagnets, it is useful to consider the magnetization. In this context, the magnetization ([math]\displaystyle{ M }[/math]) is the net magnetic moment: [math]\displaystyle{ M=\mu (N_{\uparrow}-N_{\downarrow})=-\frac{U}{B} }[/math]. The accompanying figure is based on this quantity: https://www.glowscript.org/#/user/Luigi/folder/MyPrograms/program/paramagenergy
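A minimal sketch of the energy and magnetization formulas as functions of [math]\displaystyle{ N_{\uparrow} }[/math]. The values [math]\displaystyle{ N = 100 }[/math], [math]\displaystyle{ \mu = 1 }[/math], [math]\displaystyle{ B = 2 }[/math] are arbitrary illustrative units.

```python
def energy(n_up: int, N: int, mu: float, B: float) -> float:
    """U = mu B (N_down - N_up) for a two-state paramagnet."""
    return mu * B * ((N - n_up) - n_up)


def magnetization(n_up: int, N: int, mu: float) -> float:
    """M = mu (N_up - N_down)."""
    return mu * (n_up - (N - n_up))


# Illustrative values in arbitrary units (assumed)
N, mu, B = 100, 1.0, 2.0
for n_up in (0, 50, 75, 100):
    U = energy(n_up, N, mu, B)
    M = magnetization(n_up, N, mu)
    print(n_up, U, M, -U / B)  # the last two columns agree: M = -U/B
```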

To find the temperature of the system, we consider a large number of dipoles [math]\displaystyle{ N }[/math] and use the thermodynamic relation [math]\displaystyle{ \frac{1}{T}=\left(\frac{\partial S}{\partial U}\right)_{N,B} }[/math]. By the chain rule, [math]\displaystyle{ \frac{\partial S}{\partial U}= \frac{\partial N_{\uparrow}}{\partial U}\frac{\partial S}{\partial N_{\uparrow}} }[/math]. To find [math]\displaystyle{ \frac{\partial N_{\uparrow}}{\partial U} }[/math], note that

[math]\displaystyle{ U = \mu B (N_{\downarrow}-N_{\uparrow})=\mu B(N-2N_{\uparrow})\implies }[/math]

[math]\displaystyle{ N_{\uparrow}=\frac{N}{2}-\frac{U}{2\mu B} }[/math]

[math]\displaystyle{ \frac{\partial N_{\uparrow}}{\partial U}=\frac{-1}{2\mu B}. }[/math]

Then, to find [math]\displaystyle{ \frac{\partial S}{\partial N_{\uparrow}} }[/math], we apply the Stirling approximation ([math]\displaystyle{ \ln N! \approx N\ln N - N }[/math]), valid for large [math]\displaystyle{ N }[/math]. Starting from [math]\displaystyle{ \frac{S}{k}=\ln\frac{N!}{N_{\uparrow}!(N-N_{\uparrow})!} }[/math],

[math]\displaystyle{ \frac{S}{k}=\ln N! - \ln N_{\uparrow}! - \ln(N-N_{\uparrow})! }[/math]

Applying the Stirling approximation:

[math]\displaystyle{ \frac{S}{k}= N\ln N - N -(N_{\uparrow}\ln N_{\uparrow}-N_{\uparrow})-[(N-N_{\uparrow})\ln(N-N_{\uparrow})-(N-N_{\uparrow})] }[/math]

[math]\displaystyle{ \frac{1}{k}\frac{\partial S}{\partial N_{\uparrow}}=\ln\frac{N-N_{\uparrow}}{N_{\uparrow}} }[/math][1].

Using the definition of magnetization established above,

[math]\displaystyle{ M=\mu (2N_{\uparrow}-N)=-\frac{U}{B}\implies }[/math]

[math]\displaystyle{ N_{\uparrow}=\frac{-U+N\mu B}{2\mu B}. }[/math]

Substituting back into [1].

[math]\displaystyle{ \frac{1}{k}\frac{\partial S}{\partial N_{\uparrow}}=\ln\left(\frac{N}{\frac{N\mu B-U}{2\mu B}}-1\right)=\ln\left(\frac{N+\frac{U}{\mu B}}{N-\frac{U}{\mu B}}\right) }[/math]

[math]\displaystyle{ \frac{\partial S}{\partial N_{\uparrow}}=k\ln(\frac{N+\frac{U}{\mu B}}{N-\frac{U}{\mu B}}) }[/math]

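Multiplying by [math]\displaystyle{ \frac{\partial N_{\uparrow}}{\partial U}=\frac{-1}{2\mu B} }[/math] found above gives [math]\displaystyle{ \frac{1}{T}=\frac{k}{2\mu B}\ln\left(\frac{N-\frac{U}{\mu B}}{N+\frac{U}{\mu B}}\right) }[/math].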
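As a sanity check on the derivation, the sketch below compares the Stirling-based formula for [math]\displaystyle{ 1/T }[/math] with a finite-difference derivative of the exact entropy, computed with `math.lgamma`. It assumes units with [math]\displaystyle{ k = 1 }[/math] and [math]\displaystyle{ \mu B = 1 }[/math], and takes [math]\displaystyle{ N = 10^6 }[/math], [math]\displaystyle{ N_{\uparrow} = 700{,}000 }[/math]; all of these are illustrative choices.

```python
import math

# Assumed units: k = 1 and mu*B = 1, so U is in units of mu*B and 1/T in units of k/(mu*B).
N = 10**6


def S_over_k(n_up: int) -> float:
    """Exact S/k = ln[N! / (n_up! (N - n_up)!)] via the log-gamma function."""
    return math.lgamma(N + 1) - math.lgamma(n_up + 1) - math.lgamma(N - n_up + 1)


def U(n_up: int) -> int:
    """U = mu B (N_down - N_up) = N - 2 N_up in these units."""
    return N - 2 * n_up


def inv_T_formula(u: float) -> float:
    """1/T = (k / 2 mu B) ln[(N - U/mu B) / (N + U/mu B)]."""
    return 0.5 * math.log((N - u) / (N + u))


n_up = 700_000
# Central difference: dS/dU ~ [S(n-1) - S(n+1)] / [U(n-1) - U(n+1)]
inv_T_numeric = (S_over_k(n_up - 1) - S_over_k(n_up + 1)) / (U(n_up - 1) - U(n_up + 1))
print(inv_T_numeric, inv_T_formula(U(n_up)))  # the two values agree closely
```

With more dipoles pointing up than down, [math]\displaystyle{ U \lt 0 }[/math] and the resulting temperature is positive.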

Exercise

Derive the magnetization of a two-state paramagnet in terms of [math]\displaystyle{ T }[/math] (Hint: [math]\displaystyle{ \tanh x = \frac{{e}^{x}-{e}^{-x}}{{e}^{x}+{e}^{-x}} }[/math]). What happens to the magnetization as the temperature approaches infinity? Draw a graph of how the magnetization changes with temperature.

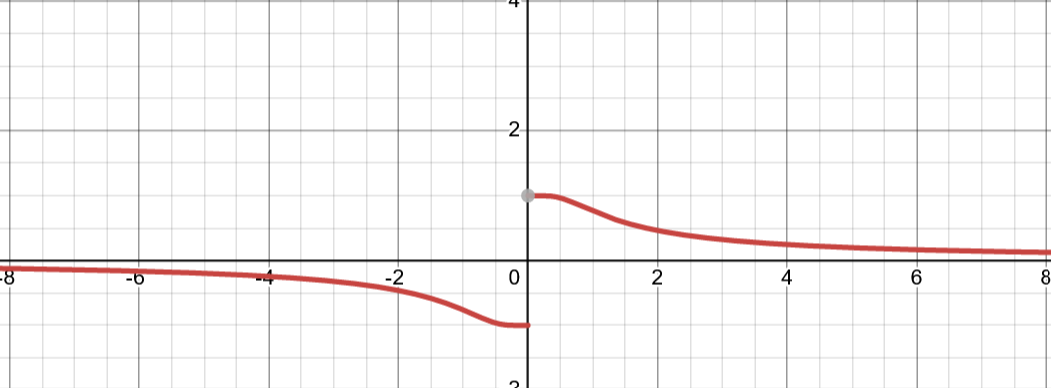

Start from [math]\displaystyle{ \frac{1}{T}=\frac{k}{2\mu B}\ln\left(\frac{N-\frac{U}{\mu B}}{N+\frac{U}{\mu B}}\right) }[/math]. Rearranging and exponentiating gives [math]\displaystyle{ e^{\frac{2\mu B}{kT}}=\frac{N-\frac{U}{\mu B}}{N+\frac{U}{\mu B}} }[/math], so [math]\displaystyle{ U=N\mu B\left(\frac{1-{e}^{\frac{2\mu B}{kT}}}{1+{e}^{\frac{2\mu B}{kT}}}\right) }[/math]. Using the identity for the hyperbolic tangent, [math]\displaystyle{ U = -N\mu B \tanh(\frac{\mu B}{kT}) }[/math]. Then, by the definition of magnetization, [math]\displaystyle{ M = -\frac{U}{B}=N\mu\tanh(\frac{\mu B}{kT}) }[/math].

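As [math]\displaystyle{ T\rightarrow\infty }[/math], [math]\displaystyle{ \frac{\mu B}{kT}\rightarrow 0 }[/math] and [math]\displaystyle{ \tanh(\frac{\mu B}{kT})\rightarrow 0 }[/math], so the magnetization goes to [math]\displaystyle{ 0 }[/math]. The graph should appear as in the figure above, with temperature on the [math]\displaystyle{ x }[/math]-axis.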
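A sketch that evaluates the exercise's result [math]\displaystyle{ M = N\mu\tanh(\frac{\mu B}{kT}) }[/math] at a few temperatures and feeds the corresponding energy [math]\displaystyle{ U = -MB }[/math] back into the [math]\displaystyle{ 1/T }[/math] relation to recover [math]\displaystyle{ T }[/math]. Units with [math]\displaystyle{ N = \mu = B = k = 1 }[/math] are an assumption made for illustration.

```python
import math

# Assumed units: N = mu = B = k = 1, so T is in units of mu*B/k and M in units of N*mu.
N, mu, B, k = 1, 1.0, 1.0, 1.0


def magnetization(T: float) -> float:
    """M = N mu tanh(mu B / k T)."""
    return N * mu * math.tanh(mu * B / (k * T))


def temperature_from_energy(U: float) -> float:
    """Invert 1/T = (k / 2 mu B) ln[(N - U/mu B) / (N + U/mu B)]."""
    x = U / (mu * B)
    return 1.0 / (k / (2 * mu * B) * math.log((N - x) / (N + x)))


rows = []
for T in (0.1, 1.0, 10.0, 1000.0):
    M = magnetization(T)
    U = -M * B  # U = -M B
    rows.append((T, M, temperature_from_energy(U)))
    print(T, M, rows[-1][2])  # the last column should reproduce T
```

The magnetization saturates near [math]\displaystyle{ N\mu }[/math] at low temperature and falls toward zero as the temperature grows.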

Quantum Physics

Statistics is used throughout Quantum Mechanics: to assign probabilities to energy states, to compute expectation values of a particle's position and momentum, to give probability densities for the electron orbitals of an atom, and much more.

First and foremost, the probability that a system prepared in state [math]\displaystyle{ |\psi \rangle }[/math] is found in state [math]\displaystyle{ |\phi \rangle }[/math] is

[math]\displaystyle{ P(\psi\to\phi) = |\langle \phi | \psi \rangle|^2 }[/math], and the Normalization Condition requires these probabilities to satisfy the definition of probability:

[math]\displaystyle{ \sum_i|\langle \phi_i | \psi \rangle|^2 = 1 }[/math], where the sum runs over a complete orthonormal set of states [math]\displaystyle{ |\phi_i \rangle }[/math]. This form applies when the measurable states in which the particle can be found are discrete.

Consider the quantum Simple Harmonic Oscillator. If [math]\displaystyle{ |\psi \rangle }[/math] is the prepared state of the particle, it can be expanded in terms of all the energy eigenstates [math]\displaystyle{ |E_i \rangle }[/math] in which the particle can be found:

[math]\displaystyle{ |\psi \rangle = \sum^\infty_{i=0} \langle E_i | \psi \rangle | E_i \rangle }[/math]

The probability of finding the particle in the energy state [math]\displaystyle{ E_i }[/math] is then simply [math]\displaystyle{ |\langle E_i | \psi \rangle|^2 }[/math].

To find the average (expected) energy of the particle, we compute the expectation over these states: [math]\displaystyle{ \langle E \rangle = \sum^\infty_{i=0} E_i |\langle E_i | \psi \rangle |^2 }[/math]
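A minimal sketch of these formulas for the harmonic oscillator, where [math]\displaystyle{ E_i = \hbar\omega(i + \frac{1}{2}) }[/math]. The three amplitudes below describe a hypothetical prepared state, and energies are in units of [math]\displaystyle{ \hbar\omega }[/math].

```python
import math


# Units (assumed): hbar * omega = 1, so E_i = i + 1/2.
def E(i: int) -> float:
    return i + 0.5


# Hypothetical prepared state: amplitudes <E_i|psi> for i = 0, 1, 2
amplitudes = [1 / math.sqrt(2), 1j / 2, -1 / 2]

probs = [abs(c) ** 2 for c in amplitudes]            # |<E_i|psi>|^2
assert math.isclose(sum(probs), 1.0)                  # normalization condition
mean_E = sum(E(i) * p for i, p in enumerate(probs))   # <E>
print(probs, mean_E)
```

Here the probabilities are 1/2, 1/4 and 1/4, so [math]\displaystyle{ \langle E \rangle = \frac{1}{2}\cdot\frac{1}{2} + \frac{1}{4}\cdot\frac{3}{2} + \frac{1}{4}\cdot\frac{5}{2} = \frac{5}{4}\hbar\omega }[/math].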

However, position is a continuous variable, so the discrete definition above cannot be used for it. As with any continuous random variable, we instead assign probabilities to intervals: the probability of finding the particle in a region of space is given by

[math]\displaystyle{ P(a\leq x \leq b) = \int^b_a |\langle x | \psi \rangle|^2 \text{d}x }[/math] Note that if we call [math]\displaystyle{ \psi(x) = \langle x | \psi \rangle }[/math] the Wave Function, then [math]\displaystyle{ P(a\leq x \leq b) = \int^b_a \psi(x) \psi^*(x) \text{d}x = \int^b_a |\psi(x)|^2 \text{d}x }[/math]

It is important to notice that here [math]\displaystyle{ |\psi(x)|^2 }[/math] is the probability density function of position. By the normalization requirement, we must also have [math]\displaystyle{ \int^\infty_{-\infty} |\psi(x)|^2 \text{d}x = 1 }[/math]

For the average position, it's just necessary to compute the expectation of [math]\displaystyle{ x }[/math]: [math]\displaystyle{ \langle x \rangle = \int^\infty_{-\infty} x |\psi(x)|^2 \text{d}x }[/math]

It is even possible to obtain the uncertainty in the position which is just the population standard deviation of position: [math]\displaystyle{ \Delta x = \sqrt{\langle x^2 \rangle - \langle x \rangle^2} }[/math]
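For a concrete case, the sketch below numerically evaluates the normalization, [math]\displaystyle{ \langle x \rangle }[/math] and [math]\displaystyle{ \Delta x }[/math] for the harmonic-oscillator ground state, assuming units with [math]\displaystyle{ m\omega/\hbar = 1 }[/math] so that [math]\displaystyle{ |\psi(x)|^2 = e^{-x^2}/\sqrt{\pi} }[/math]. The exact values are 1, 0 and [math]\displaystyle{ 1/\sqrt{2} }[/math].

```python
import math


# Assumed units: m * omega / hbar = 1, so psi_0(x) = pi**(-1/4) * exp(-x**2 / 2)
# and the position pdf is |psi_0(x)|^2 = exp(-x**2) / sqrt(pi).
def density(x: float) -> float:
    return math.exp(-x * x) / math.sqrt(math.pi)


def integrate(f, a: float = -10.0, b: float = 10.0, n: int = 20_000) -> float:
    """Composite trapezoidal rule; the density is negligible beyond |x| = 10."""
    h = (b - a) / n
    return h * (0.5 * (f(a) + f(b)) + sum(f(a + i * h) for i in range(1, n)))


norm = integrate(density)                            # should be 1
mean_x = integrate(lambda x: x * density(x))         # <x>
mean_x2 = integrate(lambda x: x * x * density(x))    # <x^2>
delta_x = math.sqrt(mean_x2 - mean_x**2)             # uncertainty in position
print(norm, mean_x, delta_x)  # delta_x should be close to 1/sqrt(2)
```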

Physics Simulations

Sometimes it is possible to model an important physical situation mathematically and, using the proper equations, obtain an analytical solution. However, this is rarely the case for more complex systems: most N-body problems can only be approximated using computational simulations, and the same is true for the analysis of chaotic systems.

In addition, the sheer amount of information, such as positions, velocities, momenta, energies, and forces, makes a complete analysis of the system impractical. This is why a statistical approach is needed, in which the focus is on macroscopic observable properties such as average energy, temperature, pressure, and average speed. Since these quantities are not exact, they are analyzed statistically by finding the distribution of the system's microstates, calculating averages, and estimating the uncertainties in these descriptions.
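As a small illustration of this statistical approach, the sketch below samples particle velocities for an ideal gas from the Maxwell-Boltzmann distribution (each Cartesian velocity component Gaussian with variance [math]\displaystyle{ kT/m }[/math]) and estimates the average kinetic energy together with its standard error. Units with [math]\displaystyle{ m = kT = 1 }[/math], the seed, and the particle count are assumptions; the equipartition value to compare with is [math]\displaystyle{ \frac{3}{2}kT }[/math].

```python
import math
import random
import statistics

# Assumed units: m = 1 and k_B T = 1, so each velocity component is Gaussian with variance 1.
random.seed(1)
N = 100_000
kinetic = []
for _ in range(N):
    vx, vy, vz = (random.gauss(0.0, 1.0) for _ in range(3))
    kinetic.append(0.5 * (vx * vx + vy * vy + vz * vz))

mean_KE = statistics.fmean(kinetic)                # average kinetic energy per particle
std_err = statistics.stdev(kinetic) / math.sqrt(N) # standard error of that average
print(f"<KE> = {mean_KE:.4f} +/- {std_err:.4f} (equipartition predicts 1.5)")
```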

This is one example of the use of statistics in a physics simulation: link

Experiments and hypothesis testing

As part of the scientific process, every physical theory must be rigorously tested by comparing its predictions with experimental observations. However, because of random errors and other sources of uncertainty, and because only a finite amount of data can be collected, this is not as simple as checking whether two numbers match.

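For this reason, statistics provides tools such as confidence intervals: a range of plausible values for a parameter, estimated from sample data, that narrows as more data are collected. A theory's prediction can then be tested by stating it as a null hypothesis and checking it against the data, for example by asking whether the predicted value lies within the confidence interval, or by computing a p-value, the probability of obtaining data at least as extreme as those observed if the null hypothesis were true. A p-value is therefore not the probability that the theory is false.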
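A minimal sketch of this procedure on simulated data: 30 hypothetical measurements of [math]\displaystyle{ g }[/math] (generated here, not real data), a large-sample 95% confidence interval, and a two-sided p-value for the null hypothesis that the true mean equals 9.81 m/s². The Gaussian noise model, the normal critical value 1.96, and the seed are assumptions; for small samples a Student t critical value is more appropriate.

```python
import math
import random
import statistics

# Hypothetical experiment: 30 simulated measurements of g in m/s^2.
# Assumption: Gaussian measurement noise with sigma = 0.05 around 9.81.
random.seed(42)
data = [random.gauss(9.81, 0.05) for _ in range(30)]
g_theory = 9.81  # null hypothesis: the true mean equals the theoretical value

n = len(data)
mean = statistics.fmean(data)
se = statistics.stdev(data) / math.sqrt(n)  # standard error of the mean

# Large-sample 95% confidence interval using the normal critical value 1.96
# (a t critical value, about 2.045 for 29 degrees of freedom, gives a slightly wider interval).
ci = (mean - 1.96 * se, mean + 1.96 * se)

# Two-sided p-value for H0: mu = g_theory, using the normal approximation
z = (mean - g_theory) / se
p_value = math.erfc(abs(z) / math.sqrt(2))

print(f"mean = {mean:.4f}, 95% CI = ({ci[0]:.4f}, {ci[1]:.4f}), p = {p_value:.3f}")
```

The two checks agree: the theoretical value lies inside the 95% interval exactly when the two-sided p-value exceeds about 0.05.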

This mathematical approach helps test new theories with experiments by considering possible factors in a quantitative manner, rather than a binary match or mismatch.

History

Statistical methods have become foundational in physics, transforming how scientists interpret data, build models, and make predictions about the natural world. From understanding the motion of atoms in a gas to analyzing signals from deep space, statistics enables physicists to tackle complexity and uncertainty. This timeline of ideas and applications shows how deeply statistics is embedded in the structure of physical theory.

Statistical Mechanics

The key contributors in statistical mechanics include James Clerk Maxwell, Ludwig Boltzmann, and J. Willard Gibbs. Statistical mechanics emerged in the 19th century to address problems classical mechanics couldn’t handle—particularly systems with an enormous number of particles, such as gases. Rather than tracking each particle’s path, physicists used probability distributions to describe average behavior across many particles.

Maxwell introduced the velocity distribution of gas particles, showing that temperature reflects average kinetic energy. Boltzmann expanded on this with his H-theorem, proposing that systems evolve toward equilibrium by increasing entropy—a measure of statistical disorder. Gibbs generalized these ideas with ensemble theory, offering a formal way to calculate thermodynamic quantities by averaging over possible microstates.

These ideas unified microscopic physics with macroscopic thermodynamics, allowing derivations of laws like the ideal gas law and explaining phenomena such as heat capacity, diffusion, and phase transitions.

Quantum Mechanics and Quantum Statistics

Quantum mechanics radically reshaped physics in the 20th century by introducing inherent probabilism. Instead of deterministic trajectories, particles are described by wavefunctions, and observables are linked to probability amplitudes. According to the Born rule, the probability of finding a particle in a given state equals the square of the wavefunction’s magnitude.

Fermi-Dirac statistics describe fermions, such as electrons, which are indistinguishable particles that obey the Pauli exclusion principle. In contrast, Bose-Einstein statistics describe bosons, such as photons, which can occupy the same state and form condensates at low temperatures. These statistical tools explain atomic structure, semiconductors, and quantum phenomena like superfluidity and superconductivity.

Thermodynamics and Entropy

The concept of entropy gained deep statistical meaning through Boltzmann’s famous equation, [math]\displaystyle{ S = k\ln W }[/math], which connects entropy to the number of microstates consistent with a system’s macrostate.

This equation explains why irreversible processes occur and gives rise to the arrow of time. The statistical interpretation of entropy also bridges thermodynamics and information theory, where entropy represents missing information or uncertainty. Today, entropy is fundamental in fields ranging from black hole physics to biology and computing.

Nuclear and Particle Physics

High-energy physics experiments rely heavily on statistics to distinguish real signals from background noise. Researchers use p-values, confidence intervals, and Bayesian inference to evaluate experimental results. For example, the 2012 discovery of the Higgs boson required a 5-sigma significance level, meaning that, if only background were present, the probability of a fluctuation at least as large as the observed signal would be less than about 1 in 3.5 million. Physicists also use Monte Carlo simulations, detector efficiency modeling, and hypothesis testing to interpret data from particle collisions.
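The 5-sigma figure can be checked directly. The sketch below assumes the conventional one-sided tail of a standard normal distribution beyond 5 standard deviations.

```python
import math

# One-sided tail probability of a standard normal beyond 5 sigma
p_5sigma = 0.5 * math.erfc(5 / math.sqrt(2))
print(p_5sigma, 1 / p_5sigma)  # about 2.9e-7, i.e. roughly 1 in 3.5 million
```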

Cosmology and Astrophysics

Cosmologists analyze large-scale structure and signals from space using a range of statistical methods. Cosmic microwave background (CMB) fluctuations are analyzed using power spectra, while galaxy clustering is studied through correlation functions. Gravitational wave detections rely on signal processing and Bayesian inference to extract weak signals from noisy data. Advanced tools such as Markov Chain Monte Carlo (MCMC) sampling help estimate parameters like the Hubble constant and test cosmological models.

Data Analysis and Experimental Uncertainty

All physics experiments require rigorous error analysis. Physicists rely on tools such as standard deviations, uncertainty propagation, and curve fitting to interpret results.

It is essential to distinguish between random and systematic errors, evaluate the reliability of measurements, and understand the statistical significance of results. From undergraduate labs to large-scale collaborations, statistical literacy is fundamental to experimental science.

References

See Also

Quantum Tunneling through Potential Barriers

Heisenberg Uncertainty Principle

Particle in a 1-Dimensional box

Additional Resources

Book: Introduction to Probability and Statistics for Engineers and Scientists