Entropy

The Main Idea

The colloquial definition of entropy is "the degree of disorder or randomness in the system" (Merriam-Webster). The scientific definition, while significantly more technical, is also significantly less vague. Put simply, entropy is a measure of the number of ways to distribute energy among one or more systems: the more ways there are to distribute the energy, the more entropy a system has.

A system in this case is a particle or group of particles, usually atoms, and the energy is distributed in discrete quanta, with the number of quanta denoted by q (see the mathematical model for details on calculating q for different atoms). How can energy be distributed to a single atom? The atom can store energy in discrete levels in each of the spatial directions it can move, namely the x, y, and z directions. It is helpful to visualize this phenomenon by picturing every atom as having three bottomless wells and each quantum of energy as a ball that is sorted into these wells. Thus an atom can store one quantum of energy in three ways, with one direction (well) having all the energy and the other two having none. Furthermore, there are 6 ways of distributing 2 quanta to one atom: 3 distributions in which 1 well has all the energy, and 3 in which 2 wells each have 1 quantum of energy.

One system can consist of more than one atom. For example, there are 6 possible distributions of 1 quantum of energy to 2 atoms, and 21 possible distributions of 2 quanta to 2 atoms. The number of possible distributions rises rapidly with larger energies and more atoms. The number of quanta available to a system or group of systems is known as the macrostate, and each possible distribution of that energy through the system or group of systems is a microstate. In the previous 2 atom example, q = 2 is the macrostate and each of the 21 possible distributions is one of the system's microstates.
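The counts quoted above (3, 6, 6, and 21) can be checked with a short script. This is a minimal sketch, assuming each atom contributes three independent wells as described in the text; the function names are illustrative and not from the original article. It counts the distributions in two ways: by brute-force enumeration and by the standard closed-form count for placing identical quanta into distinct wells.

```python
from itertools import product
from math import comb

WELLS_PER_ATOM = 3  # one well each for the x, y, and z directions


def count_by_formula(quanta, atoms):
    """Closed-form count: (quanta + wells - 1) choose quanta."""
    wells = WELLS_PER_ATOM * atoms
    return comb(quanta + wells - 1, quanta)


def count_by_enumeration(quanta, atoms):
    """Brute force: try every filling of the wells and keep those that use all quanta."""
    wells = WELLS_PER_ATOM * atoms
    return sum(
        1
        for fill in product(range(quanta + 1), repeat=wells)
        if sum(fill) == quanta
    )


for atoms, quanta in [(1, 1), (1, 2), (2, 1), (2, 2)]:
    print(atoms, "atom(s),", quanta, "quanta:",
          count_by_formula(quanta, atoms), count_by_enumeration(quanta, atoms))
```

The enumeration is only practical for very small systems, which is why the closed-form count is used for anything larger.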

The number of possible distributions for a given number of quanta q is denoted by Ω (see the mathematical model for how to calculate Ω). To find the number of ways energy can be distributed between 2 systems for a particular division of the energy, multiply the two systems' respective values of Ω.

The Fundamental Assumption of Statistical Mechanics states that, over time, every single microstate of an isolated system is equally likely. Thus if you were to observe 2 quanta distributed to 2 atoms, you would be equally likely to observe any of its 21 possible microstates. While this idea is called an assumption, it also agrees with experimental results. This principle is very useful when calculating the probability of different divisions of energy. For example, if 4 quanta of energy are shared between two atoms, the distribution is as follows.

There are 36 different microstates in which the 4 quanta of energy are shared evenly between the two atoms, and 126 different microstates in total. Given the Fundamental Assumption of Statistical Mechanics, the most probable distribution is simply the distribution with the most microstates. As an even split has the most microstates, it is the most probable distribution. About 29% of the microstates (36 of 126) have an even split, corresponding to a 29% chance of finding the energy distributed this way.
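The numbers for the 4-quanta, two-atom case can be tabulated with a few lines of Python. This is a sketch under the same assumption as the text (three wells per atom); the helper names are hypothetical. For each way of splitting the quanta, it multiplies the two atoms' values of Ω and divides by the total to get a probability.

```python
from math import comb


def omega(quanta, oscillators):
    """Number of ways to distribute `quanta` among `oscillators` wells."""
    return comb(quanta + oscillators - 1, quanta)


def energy_split_table(total_quanta, wells_a, wells_b):
    """Return rows (q_a, q_b, microstates, probability) and the total count."""
    rows = []
    for q_a in range(total_quanta + 1):
        q_b = total_quanta - q_a
        rows.append((q_a, q_b, omega(q_a, wells_a) * omega(q_b, wells_b)))
    total = sum(ways for _, _, ways in rows)
    return [(q_a, q_b, ways, ways / total) for q_a, q_b, ways in rows], total


table, total = energy_split_table(4, 3, 3)  # two atoms, three wells each
for q_a, q_b, ways, prob in table:
    print(f"atom 1: {q_a}  atom 2: {q_b}  microstates: {ways:3d}  probability: {prob:.3f}")
print("total microstates:", total)
```

The row for the even split (2 quanta each) carries 36 of the 126 microstates, and the counts for the five possible splits are symmetric around it.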

As the total number of quanta and atoms in the two systems increases, the number of microstates balloons at a nearly comical rate. When the number of quanta and the number of atoms in both systems reach the hundreds, the total number of microstates is comfortably above [math]\displaystyle{ 10^{100} }[/math].
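To get a feel for how quickly the count grows, it is easier to work with the logarithm of Ω than with Ω itself. The sketch below uses the log-gamma function to estimate the base-10 logarithm of Ω. The specific example (two 100-atom systems sharing 200 quanta, treated as one system of 600 wells) is an illustrative choice, not a case taken from the article.

```python
from math import lgamma, log


def log10_omega(quanta, oscillators):
    """Base-10 logarithm of (quanta + oscillators - 1) choose quanta.

    Uses ln(n!) = lgamma(n + 1), so no huge integers are ever built.
    """
    ln_omega = (
        lgamma(quanta + oscillators)
        - lgamma(quanta + 1)
        - lgamma(oscillators)
    )
    return ln_omega / log(10)


# Small sanity check against the two-atom, 4-quanta case (Omega = 126).
print(10 ** log10_omega(4, 6))

# Illustrative large case: 200 atoms in total (600 wells) sharing 200 quanta.
print("log10(Omega) =", log10_omega(200, 600))
```

For the large case the exponent comes out well above 100, consistent with the claim in the text.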

The study of microscopic distributions of energy is extremely useful in explaining macroscopic phenomena, from a bouncing rubber ball to the temperatures of 2 adjacent objects.

A Mathematical Model

The goal of these formulas is to be able to calculate heat and temperature of certain objects:

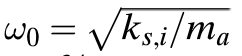

Here are the formulas for Einstein's model of a solid:

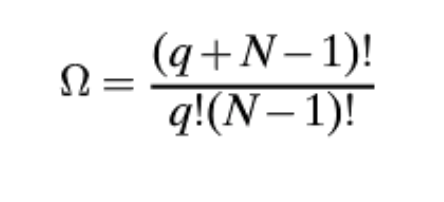

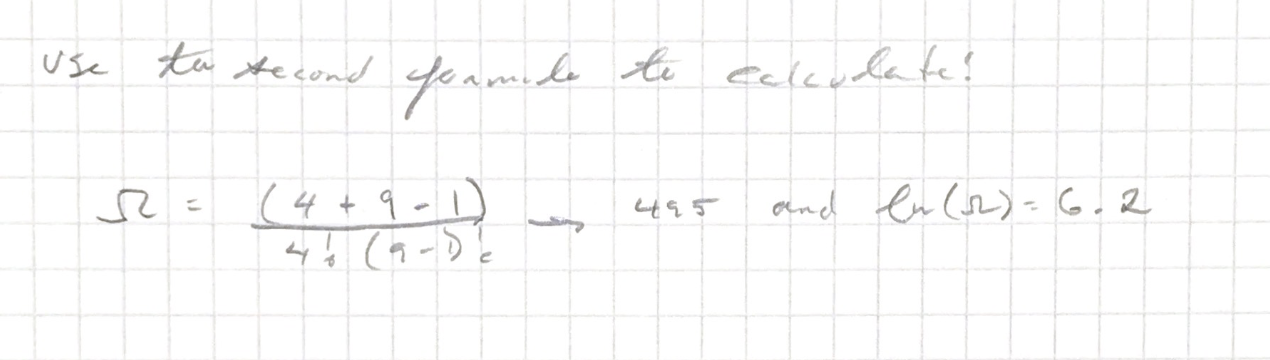

Here is a formula to calculate how many ways there are to arrange q quanta among n one-dimensional oscillators:

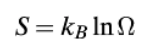

From this you can directly calculate Entropy (S):

Where the Boltzmann constant is [math]\displaystyle{ k_B = 1.38 \times 10^{-23} }[/math] J/K

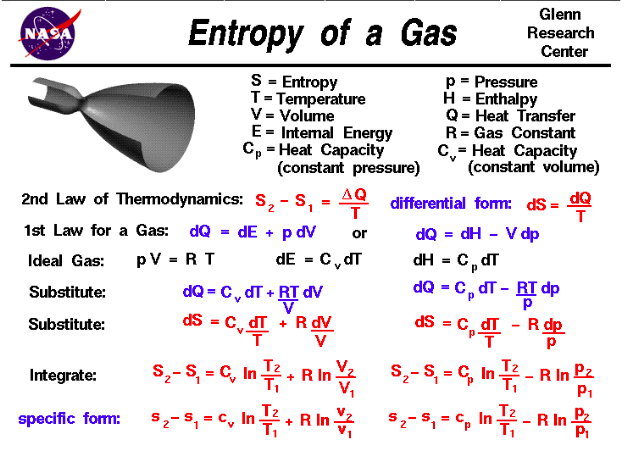

The following is an image from NASA's website describing the entropy of a gas and the derivation of the specific form of the equation describing entropy found from manipulating the second law of thermodynamics:

A Computational Model

How to calculate entropy with given n and q values:

https://trinket.io/embed/glowscript/c24ad7936e
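For readers who cannot open the embedded program, the following plain-Python sketch shows one way such a calculation could look. It is not a copy of the linked GlowScript code; it assumes the standard count of ways to arrange q quanta among n one-dimensional oscillators and the relation S = k_B ln Ω, with the Boltzmann constant value quoted in this article.

```python
from math import comb, log

K_B = 1.38e-23  # Boltzmann constant in J/K, as quoted in the mathematical model


def microstates(q, n):
    """Ways to arrange q quanta among n one-dimensional oscillators."""
    return comb(q + n - 1, q)


def entropy(q, n):
    """Entropy S = k_B * ln(Omega) in J/K for q quanta and n oscillators."""
    return K_B * log(microstates(q, n))


# Example values of n and q; these are arbitrary inputs chosen for illustration.
for q, n in [(0, 3), (4, 6), (100, 300)]:
    print(f"q = {q}, n = {n}: Omega = {microstates(q, n)}, S = {entropy(q, n):.3e} J/K")
```

Note that n here counts oscillators, so a solid of N atoms corresponds to n = 3N.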

Examples

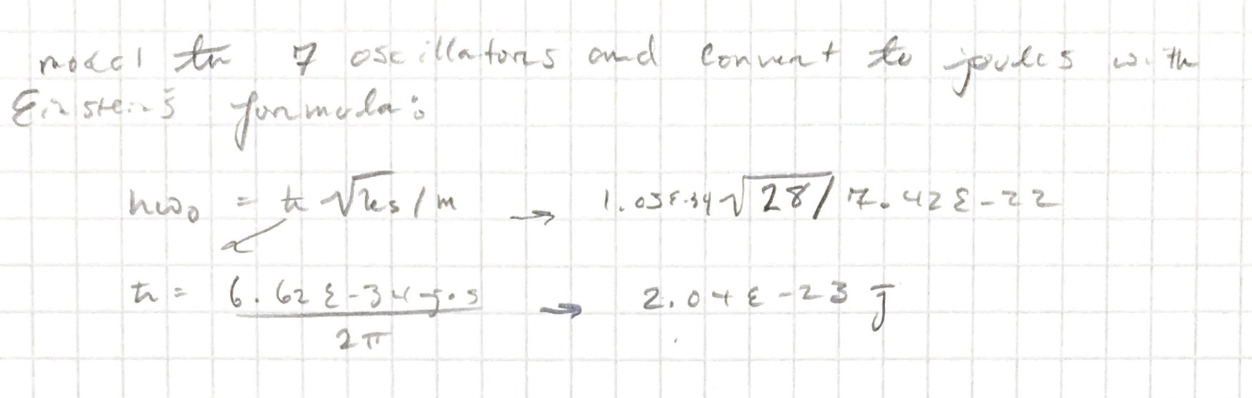

Given the interatomic spring stiffness of copper is 28 N/m, answer the following:

Simple

1)

For a nanoparticle consisting of 7 copper atoms, how many joules are there in the cluster of nano-particles?

__________________________________________________________________________________________________________________________________________________

2)

Given:

delta H fusion: x kJ/mol

T fusion: y degrees C

delta H vaporization: 10x kJ/mol

T vaporization: 10y degrees C

Which of the following is true?

a) change in entropy of fusion = change in entropy of vaporization

b) change in entropy of fusion > change in entropy of vaporization

c) change in entropy of fusion < change in entropy of vaporization

answer: c

delta S fusion = delta H fusion / T fusion = x / (y + 273)

- when T is in kelvin

delta S vaporization = delta H vaporization / T vaporization = 10x / (10y + 273)

- when T is in kelvin

Since 10x / (10y + 273) = x / (y + 27.3) and y + 27.3 < y + 273, we get x / (y + 273) < 10x / (10y + 273), so delta S fusion < delta S vaporization (for positive x and positive temperatures in kelvin).
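The inequality can also be checked numerically. The values x = 5 and y = 50 below are hypothetical, chosen only to illustrate the comparison; they do not correspond to any particular substance.

```python
def entropy_change(delta_h_kj_per_mol, temp_celsius):
    """Entropy change delta S = delta H / T in J/(mol K), with T converted to kelvin."""
    temp_kelvin = temp_celsius + 273
    return 1000 * delta_h_kj_per_mol / temp_kelvin


x = 5.0   # hypothetical delta H fusion in kJ/mol
y = 50.0  # hypothetical T fusion in degrees C

ds_fusion = entropy_change(x, y)
ds_vaporization = entropy_change(10 * x, 10 * y)

print(f"delta S fusion       = {ds_fusion:.2f} J/(mol K)")
print(f"delta S vaporization = {ds_vaporization:.2f} J/(mol K)")
print("fusion < vaporization:", ds_fusion < ds_vaporization)
```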

_____________________________________________________________________________________________________________________________________________________

Middling

What is the value of Ω for 4 quanta of energy in the nanoparticle?

Difficult

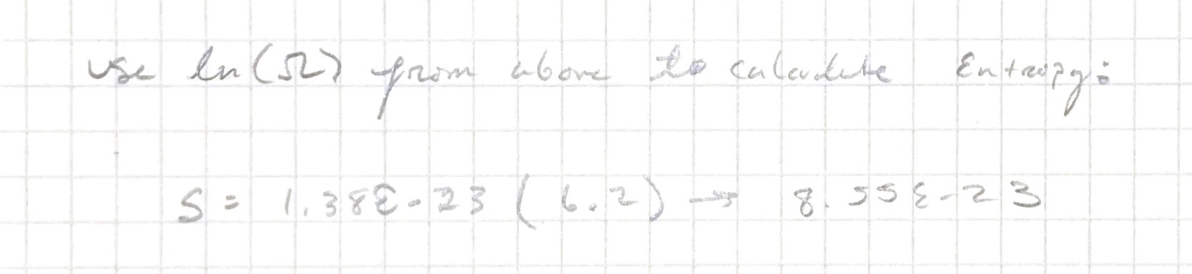

Calculate the entropy of the system using the previously calculated value of Ω.
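One possible way to work the last two questions is sketched below. It assumes the 7-atom nanoparticle is modeled as 3 one-dimensional oscillators per atom (21 in total), as in the main idea section, and uses the Boltzmann constant value quoted in the mathematical model. The variable names are illustrative.

```python
from math import comb, log

K_B = 1.38e-23            # Boltzmann constant in J/K
ATOMS = 7                 # copper atoms in the nanoparticle
OSCILLATORS = 3 * ATOMS   # assumption: three oscillators (x, y, z) per atom
QUANTA = 4

# Number of ways to arrange 4 quanta among 21 oscillators.
omega = comb(QUANTA + OSCILLATORS - 1, QUANTA)

# Entropy from S = k_B * ln(Omega).
entropy = K_B * log(omega)

print("Omega =", omega)
print(f"S = {entropy:.3e} J/K")
```

Under these assumptions Ω = 10626, and the entropy is roughly 1.28 × 10^-22 J/K.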

Connectedness

In my research I read that entropy is known as time's arrow, which in my opinion is one of the most powerful descriptions of a physics term. The growth of entropy is a fundamental principle that makes the universe tick, and it is such a powerful tendency that it will (possibly) cause the eventual end of the entire universe. Since entropy always increases, over an obscene amount of time the universe may eventually suffer a "heat death," a state of maximum entropy in which no further useful work can be done. This is merely a scientific hypothesis, and though it may be gloomy, Asimov's supercomputer Multivac may finally solve the Last Question and reboot the entire universe again.

The study of entropy is pertinent to my major as an Industrial Engineer, as the whole idea of entropy is statistical thermodynamics. This is very similar to Industrial Engineering, as it is essentially a statistical business major. Though it is unlikely that entropy will be directly used in a day in the life of an Industrial Engineer, the same distributions and concepts of probability are universal and carry over regardless of whether the example is from thermodynamics or business.

My understanding of quantum computers comes from no more than a couple of Wikipedia articles and YouTube videos, but I assume anything in the field of quantum mechanics, which definitely relates to entropy, is important in making chips that can withstand intense heat transfers, etc.

History

The first person to give entropy a name was Rudolf Clausius. In his work he examined the amount of usable heat lost during a reaction, in contrast with the earlier view, held by Sir Isaac Newton, that heat was a physical particle. Clausius picked the name entropy because, in Greek, en + tropē means "transformation content."

The concept of entropy was then expanded on mainly by Ludwig Boltzmann, who essentially modeled entropy as a system of probability. Boltzmann gave a larger scale visualization method of an ideal gas in a container; he then stated that the logarithm of the number of microstates the gas particles could occupy, multiplied by the constant that now bears his name, is the definition of entropy.

In this way, entropy grew from an idea expressed in the terms of thermodynamics into a concept of statistical thermodynamics, which has many formulas and methods of calculation.

Regarding the relationship between entropy and the laws of thermodynamics, the second law of thermodynamics implies that the only processes that occur naturally while satisfying the first law of thermodynamics are those that do not decrease entropy (in other words those that increase or keep entropy the same). There is therefore no physical process that decreases the (universal) entropy. In essence, the universe is perpetually approaching a higher state of entropy, hence the saying the universe tends towards chaos.

See also

Here is a list of great resources about entropy that make it easier to understand and expound further on the details of the topic.

Further reading

External links

Great TED-Ed lesson on the subject: https://ed.ted.com/lessons/what-is-entropy-jeff-phillips

References

- http://gallica.bnf.fr/ark:/12148/bpt6k152107/f369.table

- http://www.panspermia.org/seconlaw.htm

- https://ed.ted.com/lessons/what-is-entropy-jeff-phillips

- https://www.merriam-webster.com/dictionary/entropy

- https://www.grc.nasa.gov/www/k-12/airplane/entropy.html

- https://oli.cmu.edu

- Chabay, Ruth W., and Bruce A. Sherwood. Matter & Interactions. John Wiley & Sons, 2015.